Completions

When you "execute" a Prompt, Promptmetheus compiles it into a plain text representation (string) and sends it to the inference API, where it is fed into the selected LLM. The generated Completion is then stored and streamed back to the IDE for you to inspect.

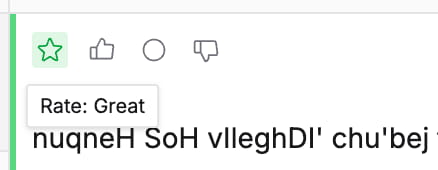

Ratings

Ratings allow you to evaluate the valence of a Completion and represent it visually with a color code. Just click on a rating icon at the top-left of a Completion item to grade it:

- Great (star, green)

- Good (thumbs up, lime)

- Neutral (circle, gray)

- Bad (thumbs down, red)

There is no "correct" set of criteria for applying Ratings. Just experiment and figure out what works for your use case. It's an empirical metric.

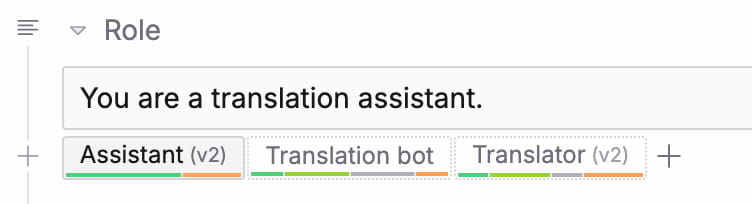

Ratings also help you gauge the performance of each Variant of a Block via inline statistics. Whenever you rate a Completion, every Variant that was used during its generation will receive that same rating and color coding. While you refine your Prompt and experiment with different Variants, rating stats will build up below each Variant tab and tell you which of them performs best.

If you find these inline statistics unhelpful or distracting, you can turn them off in the app settings.
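The way a rating propagates from a Completion to the Variants that produced it can be sketched as a simple tally. This is only an illustration of the idea described above, not Promptmetheus's actual implementation: the `variants` and `rating` keys and the `tally_variant_ratings` helper are hypothetical names.

```python
from collections import Counter, defaultdict


def tally_variant_ratings(completions):
    """Count ratings per Variant.

    Every rated Completion credits its rating to each Variant that was
    used to generate it. Unrated Completions are skipped.
    """
    stats = defaultdict(Counter)
    for completion in completions:
        rating = completion.get("rating")
        if rating is None:
            continue
        for variant_id in completion["variants"]:
            stats[variant_id][rating] += 1
    return stats


# Hypothetical data: each Completion records the Variants it was built from.
completions = [
    {"variants": ["intro-a", "task-b"], "rating": "great"},
    {"variants": ["intro-a", "task-c"], "rating": "bad"},
    {"variants": ["intro-b", "task-b"], "rating": None},  # not rated yet
]

stats = tally_variant_ratings(completions)
for variant_id, counts in sorted(stats.items()):
    print(variant_id, dict(counts))
```

A Variant that appears in many well-rated Completions accumulates a favorable tally, which is the signal the inline statistics are meant to surface.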

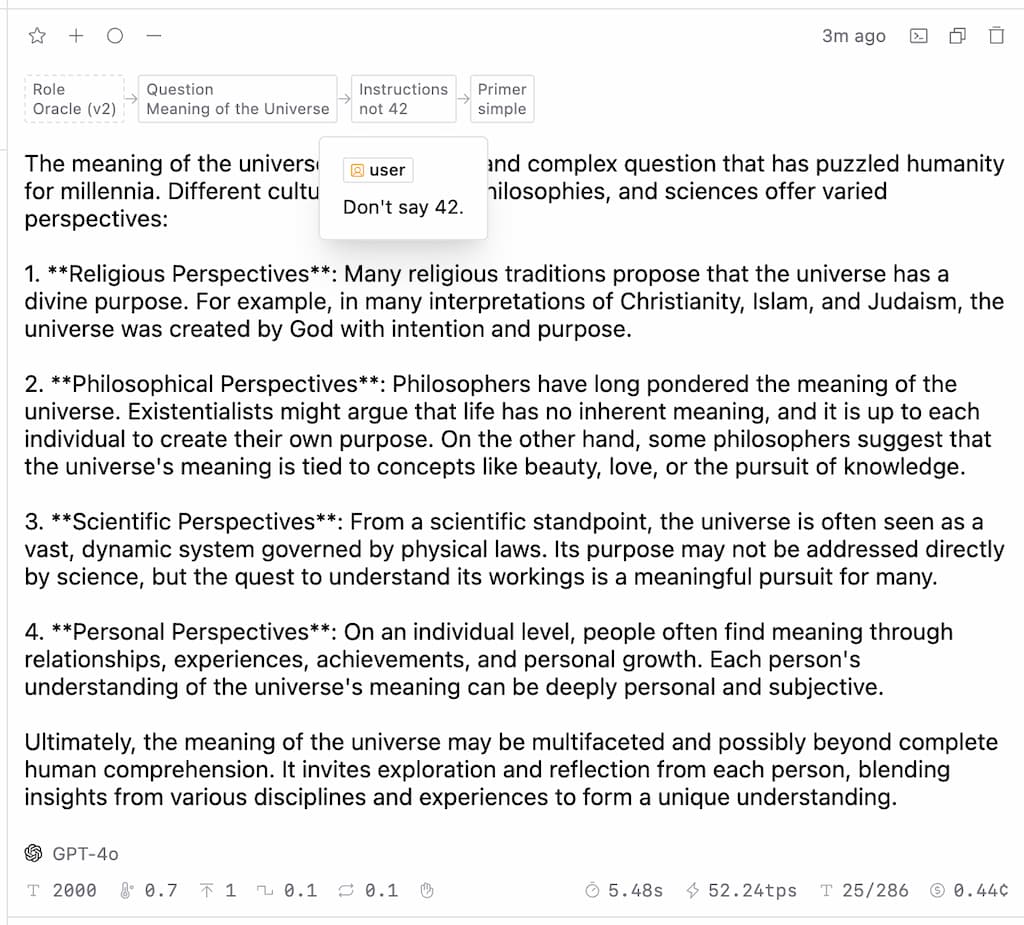

Fragments

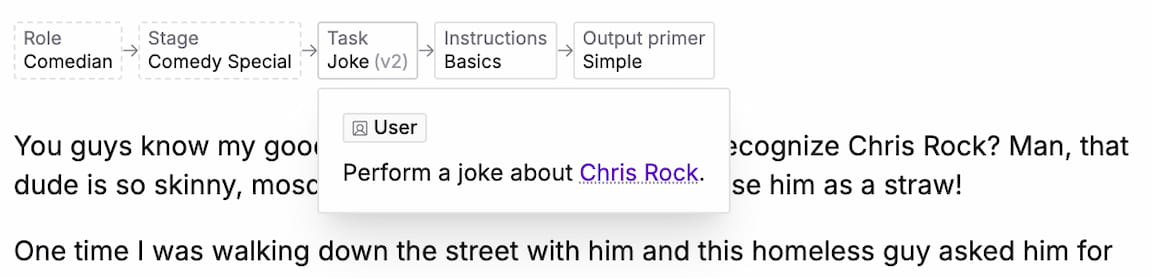

Fragments are snapshots that capture a Block's state at the time of execution, including the selected Variant (and its version) and the values of the Variables used. Fragments are displayed at the top of each Completion and let you inspect details about the Prompt that generated it just by hovering over them with the cursor.

If you don't want to display Fragments with your Completions, you can turn them off in the Display Mode settings.

Evaluations

Take a look at the Evaluators section for more information on how evaluators and automatic evaluations work.

Model Settings

At the bottom left of each completion you can find the identifier of the model that was used for the completion together with the values of the selected model parameters:

...and indicators for Reasoning Effort, Thinking, Web Search Options, Seed, JSON Mode, and Stop Sequences.

Inference Details

At the bottom right you can find a selection of relevant metrics for the completion.

- Completion Time (Inference Speed in tps on hover)

- Token Count (input/output)

- Completion Cost (in cents)
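To make the relationship between these metrics concrete, here is a rough sketch of how such numbers could be derived. It assumes that inference speed is output tokens divided by completion time and that cost follows per-million-token pricing; both formulas, the function names, and the prices are assumptions for illustration, not confirmed details of how Promptmetheus calculates them.

```python
def inference_speed_tps(output_tokens: int, completion_time_s: float) -> float:
    """Tokens per second, assuming speed is measured on output tokens."""
    if completion_time_s <= 0:
        raise ValueError("completion time must be positive")
    return output_tokens / completion_time_s


def completion_cost_cents(
    input_tokens: int,
    output_tokens: int,
    input_usd_per_mtok: float,
    output_usd_per_mtok: float,
) -> float:
    """Cost in cents, assuming separate per-million-token prices in USD."""
    usd = (
        input_tokens * input_usd_per_mtok + output_tokens * output_usd_per_mtok
    ) / 1_000_000
    return usd * 100


# Hypothetical completion: 1200 input tokens, 300 output tokens, 2.5 seconds.
speed = inference_speed_tps(output_tokens=300, completion_time_s=2.5)
# Hypothetical prices: $1 per million input tokens, $2 per million output tokens.
cost = completion_cost_cents(1200, 300, input_usd_per_mtok=1.0, output_usd_per_mtok=2.0)
print(f"{speed:.0f} tps, {cost:.2f} cents")
```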

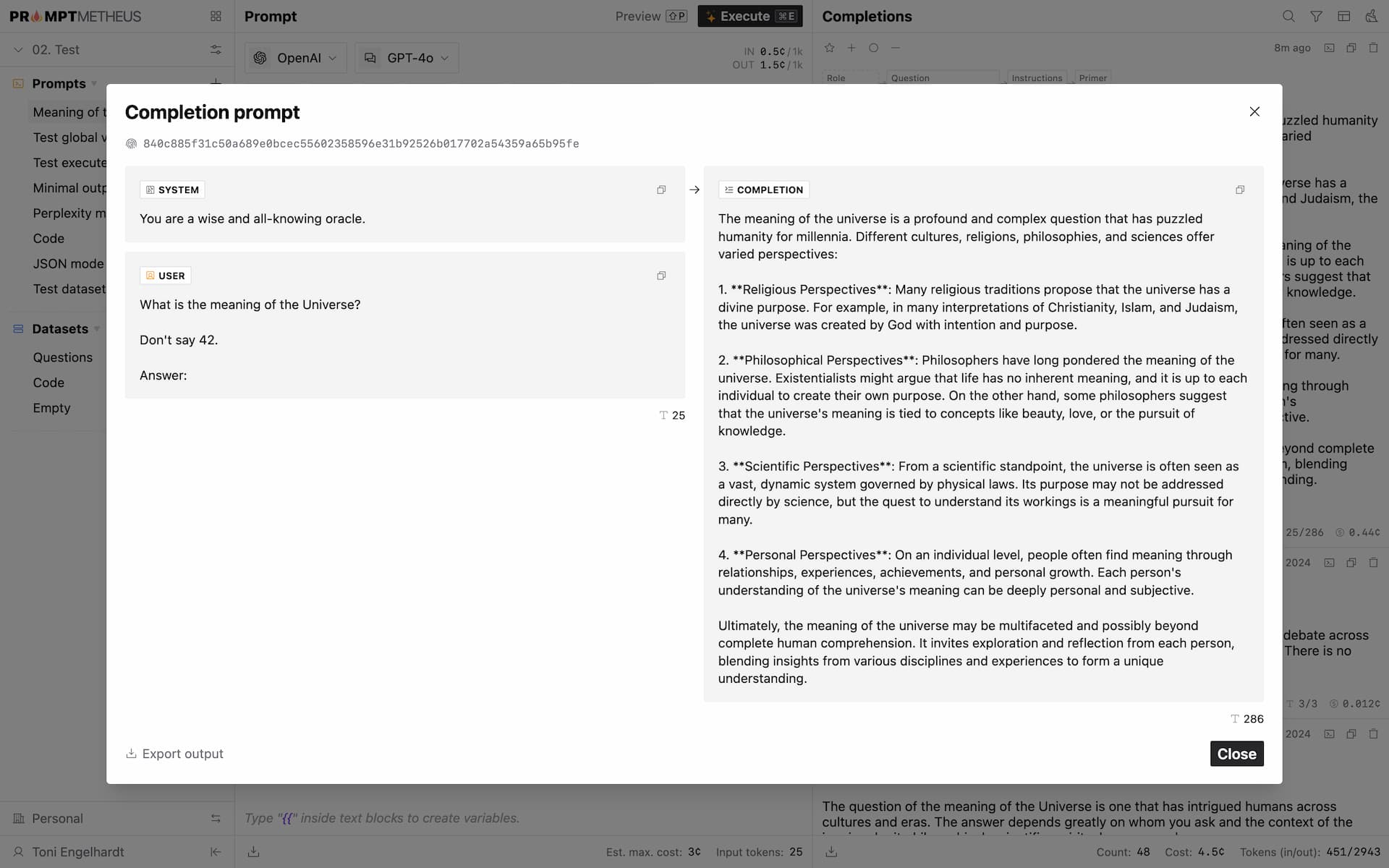

Completion Prompt

In addition to Fragments, you can also inspect the entire Prompt that was used to generate the Completion. Just click on the icon in the Completion actions to view its Fingerprint and compiled Messages.

Search and Filter

You can search completions and/or filter them by rating with the respective actions at the top-right of the screen. More filter options are in the making.

Formatting

The Completion list currently has 2 formatting options (more to come):

- Text: plain text, no formatting at all

- Markdown: markup formatting and syntax highlighting for code blocks

Display Mode

The Completion list has 3 different Display Modes you can choose from:

- Minimal: completion content only

- Compact: content, ratings, evaluations, model settings, and inference details

- Details: same as Compact, plus fragments (default, recommended)

Export

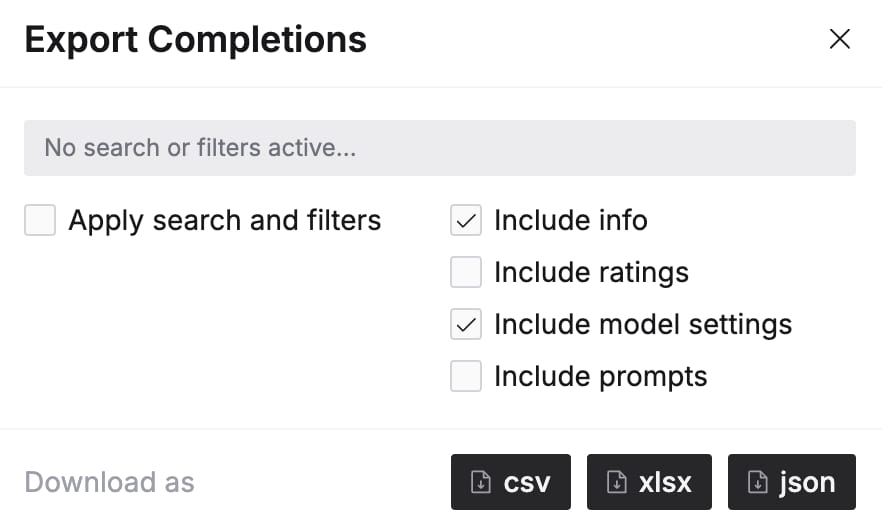

You can export Completions from the Prompt page via the menu at the top-right corner of the screen › Export completions.

Export Options

There are several options to customize your exports:

Apply search and filters

If the Completion list has active filters or a search term (see related section), you can check this option to apply the same search/filters to the export. By default, all Completions will be exported.
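The behavior of this option can be pictured roughly as follows. This is a minimal sketch under assumed data shapes: the `content` and `rating` keys, the case-insensitive substring search, and the `select_for_export` helper are hypothetical and only illustrate the "export everything by default, narrow down when checked" logic.

```python
def select_for_export(completions, search="", ratings=None, apply_filters=False):
    """Return the Completions that would end up in the export.

    With apply_filters=False (the default), everything is exported.
    Otherwise the active search term and rating filter are applied.
    """
    if not apply_filters:
        return list(completions)
    term = search.lower()
    return [
        c
        for c in completions
        if term in c["content"].lower()
        and (not ratings or c.get("rating") in ratings)
    ]


# Hypothetical Completion list.
completions = [
    {"content": "Paris is the capital of France.", "rating": "great"},
    {"content": "The capital of France is Lyon.", "rating": "bad"},
    {"content": "Bonjour!", "rating": "good"},
]

everything = select_for_export(completions, search="capital", ratings={"great"})
filtered = select_for_export(
    completions, search="capital", ratings={"great"}, apply_filters=True
)
print(len(everything), len(filtered))
```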

Include [x]

The "Include ..." options specify the properties that should be included in the export.

Export Formats

Promptmetheus currently supports Completion exports in CSV, XLSX, and JSON formats. More formats are planned, including Markdown, HTML, and PDF.
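For readers who want to reason about what such an export might contain, the sketch below serializes a list of Completions to CSV or JSON while honoring a set of included properties. The property names and the `export_completions` helper are hypothetical, and the real export layout may differ. XLSX is left out because it would require a third-party library.

```python
import csv
import io
import json


def export_completions(completions, fmt, include):
    """Serialize Completions to a CSV or JSON string.

    Only the properties named in `include` are written, mirroring the
    idea of the "Include ..." options.
    """
    rows = [{key: c.get(key) for key in include} for c in completions]
    if fmt == "json":
        return json.dumps(rows, indent=2)
    if fmt == "csv":
        buffer = io.StringIO()
        writer = csv.DictWriter(buffer, fieldnames=list(include))
        writer.writeheader()
        writer.writerows(rows)
        return buffer.getvalue()
    raise ValueError(f"unsupported format: {fmt}")


# Hypothetical Completion with more properties than we choose to export.
completions = [{"content": "Hello", "rating": "good", "model": "example-model"}]

csv_text = export_completions(completions, "csv", include=["content", "rating"])
json_text = export_completions(completions, "json", include=["content", "rating"])
print(csv_text)
print(json_text)
```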