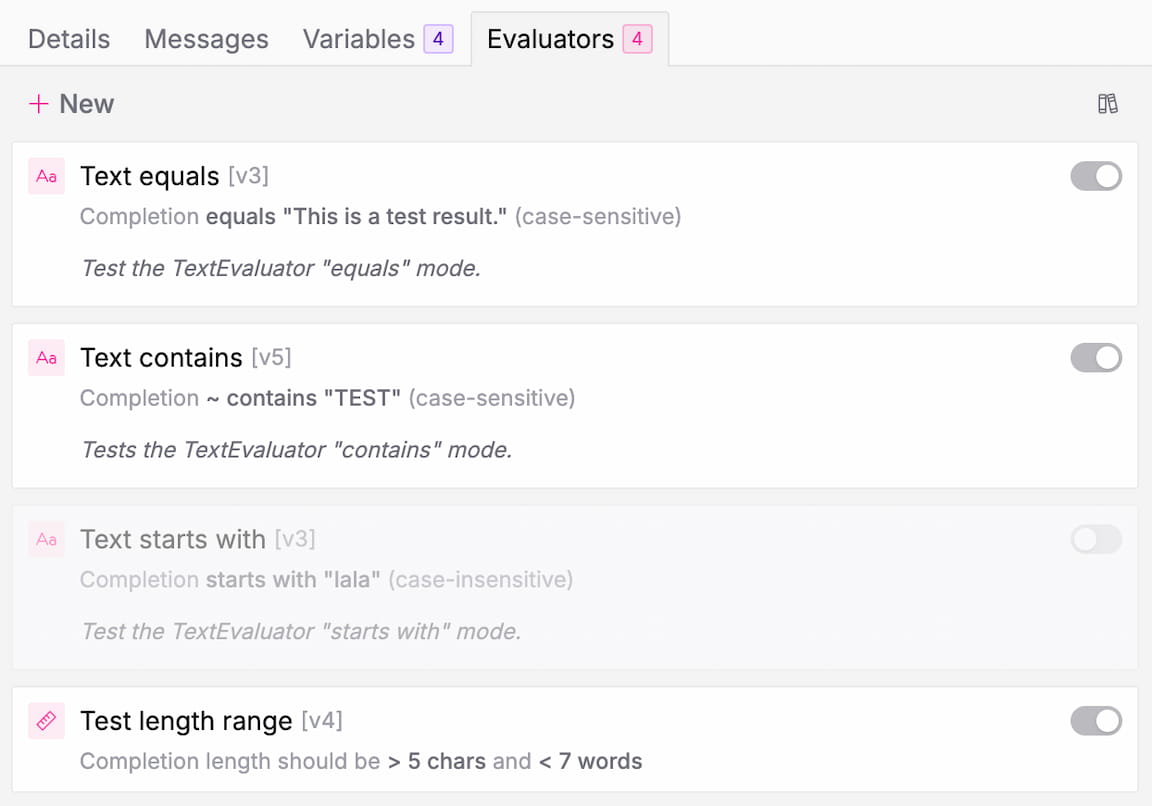

Evaluators

Evaluators automatically validate Prompt Completions against user-defined rules, for example whether a Completion starts with a specific phrase, has a certain length, or matches a desired format.

Evaluators can be created and configured under the "Evaluators" tab on the Prompt page.

At the moment, Evaluators cannot be reused across different Prompts; this is planned for a future release.


Versioning (History)

Evaluators automatically keep track of their configuration history, so that it is always fully traceable which evaluators were used with which configuration for each completion.

An evaluator's version number increments automatically when its configuration is changed and the evaluator has already been executed on at least one completion. If the evaluator has never been executed, updating the configuration changes it in place and does not create a new version.
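The versioning rule can be illustrated with a minimal sketch. The class and field names below (`VersionedEvaluator`, `executed_once`, `history`) are hypothetical and not part of the product; the sketch only mirrors the rule as described here and assumes the "has been executed" flag is never reset.

```python
from dataclasses import dataclass, field


@dataclass
class VersionedEvaluator:
    """Hypothetical model of an evaluator with a configuration history."""
    config: dict
    version: int = 1
    executed_once: bool = False  # True once the evaluator ran on any completion
    history: list = field(default_factory=list)  # (version, config) pairs

    def mark_executed(self) -> None:
        """Record that the evaluator has run on at least one completion."""
        self.executed_once = True

    def update_config(self, new_config: dict) -> int:
        """Apply a configuration change and return the resulting version."""
        if new_config == self.config:
            return self.version  # nothing changed, nothing to version
        if self.executed_once:
            # Completions already reference the old configuration, so keep it.
            self.history.append((self.version, self.config))
            self.version += 1
        # Never executed: the configuration is simply overwritten.
        self.config = new_config
        return self.version
```

In this sketch, editing a never-executed evaluator leaves it at version 1, while the first edit after `mark_executed()` archives the old configuration and moves to version 2.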

Evaluator Types

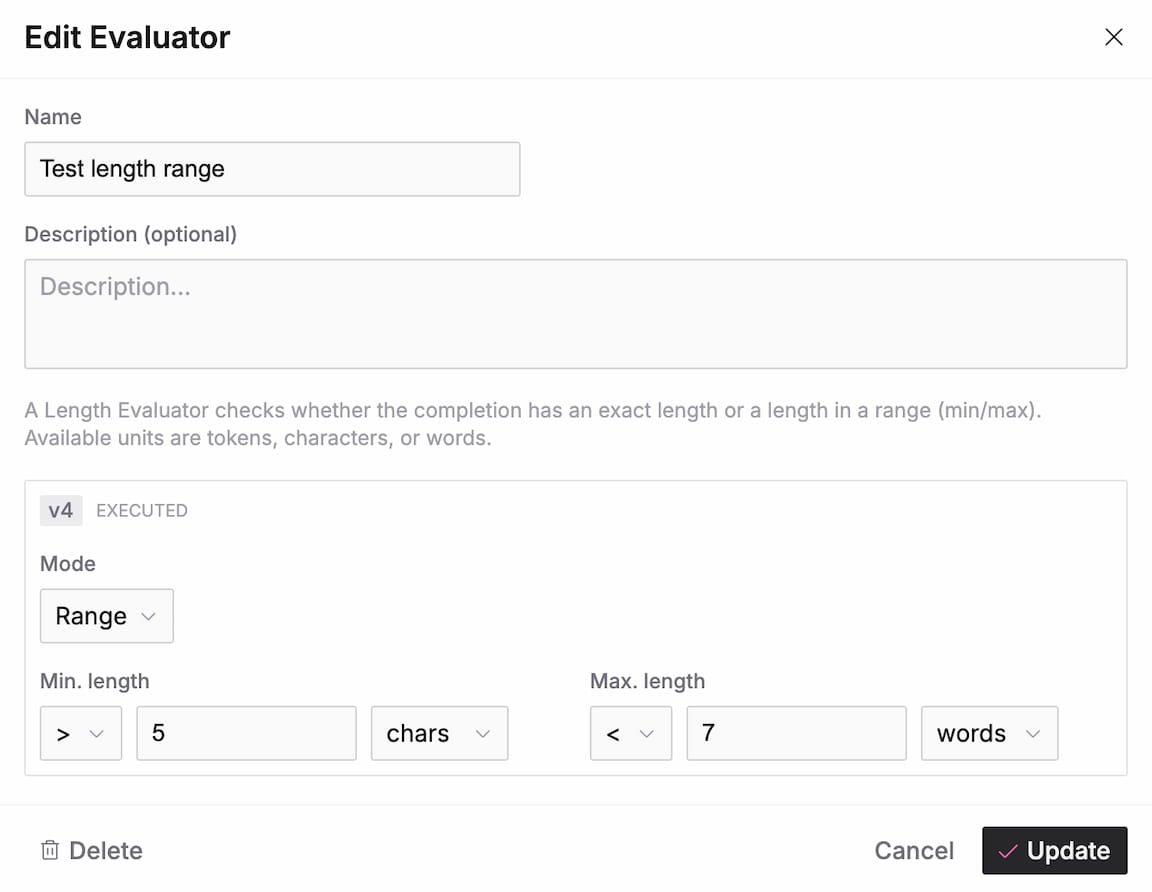

Length Evaluator

Length evaluators validate the length of prompt completions, either against an exact value or a range (min/max). The unit for the evaluation can be tokens, characters, or words, depending on preference and use case.
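As a rough illustration of this check, the sketch below measures a completion in the chosen unit and compares it with an exact value or a min/max range. The function name and parameters are assumptions made for the example, not the product's API. Words are assumed to be whitespace-separated, and token counting is delegated to a caller-supplied `tokenize` function because token counts depend on the model's tokenizer.

```python
from typing import Callable, Optional, Sequence


def length_check(
    completion: str,
    unit: str = "characters",
    exact: Optional[int] = None,
    minimum: Optional[int] = None,
    maximum: Optional[int] = None,
    tokenize: Optional[Callable[[str], Sequence]] = None,
) -> bool:
    """Hypothetical length check: exact value or inclusive min/max range."""
    if unit == "characters":
        length = len(completion)
    elif unit == "words":
        length = len(completion.split())  # assumption: whitespace-separated words
    elif unit == "tokens":
        if tokenize is None:
            raise ValueError("token counting needs a tokenizer function")
        length = len(tokenize(completion))
    else:
        raise ValueError(f"unknown unit: {unit!r}")

    if exact is not None:
        return length == exact
    if minimum is not None and length < minimum:
        return False
    if maximum is not None and length > maximum:
        return False
    return True
```

For example, `length_check("hello world", unit="words", exact=2)` passes, while `length_check("hello world", unit="characters", maximum=10)` fails because the completion has 11 characters.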

Regex Evaluator

🚧 Coming soon...

Boolean Evaluator

🚧 Coming soon...

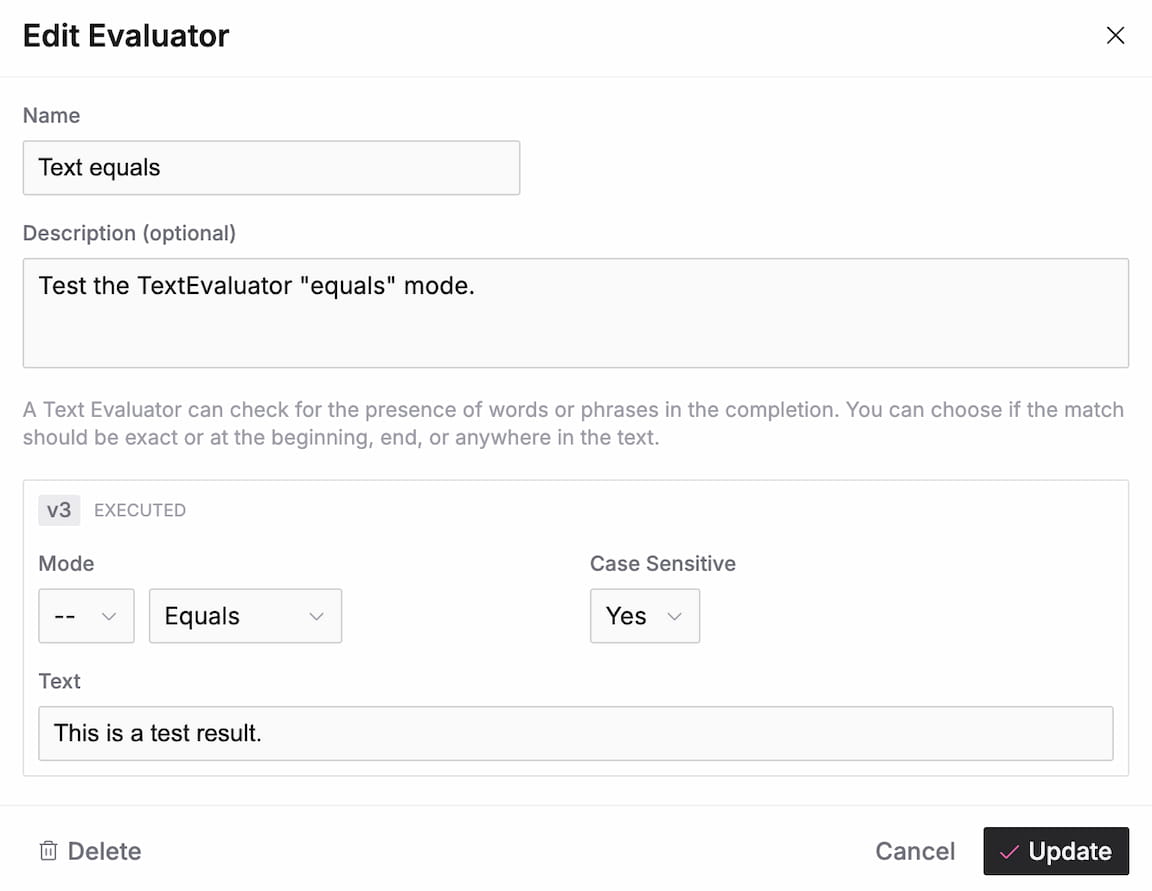

Text Evaluator

Text evaluators check whether the prompt completion exactly matches a specific text string, or whether that string is present or absent at the beginning, at the end, or anywhere in the completion. Users can also specify whether the evaluation should be case-sensitive.
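The options described for text evaluators can be sketched as a single function. All names here (`text_check`, `position`, `must_be_present`) are hypothetical and chosen for the example; the product's actual configuration fields may differ.

```python
def text_check(
    completion: str,
    expected: str,
    position: str = "anywhere",
    case_sensitive: bool = True,
    must_be_present: bool = True,
) -> bool:
    """Hypothetical text check covering match position, case, and absence."""
    if not case_sensitive:
        # casefold() is a more aggressive lower() intended for caseless matching
        completion, expected = completion.casefold(), expected.casefold()

    if position == "exact":
        found = completion == expected
    elif position == "start":
        found = completion.startswith(expected)
    elif position == "end":
        found = completion.endswith(expected)
    elif position == "anywhere":
        found = expected in completion
    else:
        raise ValueError(f"unknown position: {position!r}")

    # With must_be_present=False the check passes only if the text is absent.
    return found == must_be_present
```

For example, `text_check("Sure, here you go.", "sure", position="start", case_sensitive=False)` passes, and `text_check("Final answer: 42", "sorry", must_be_present=False)` passes because the string is absent.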

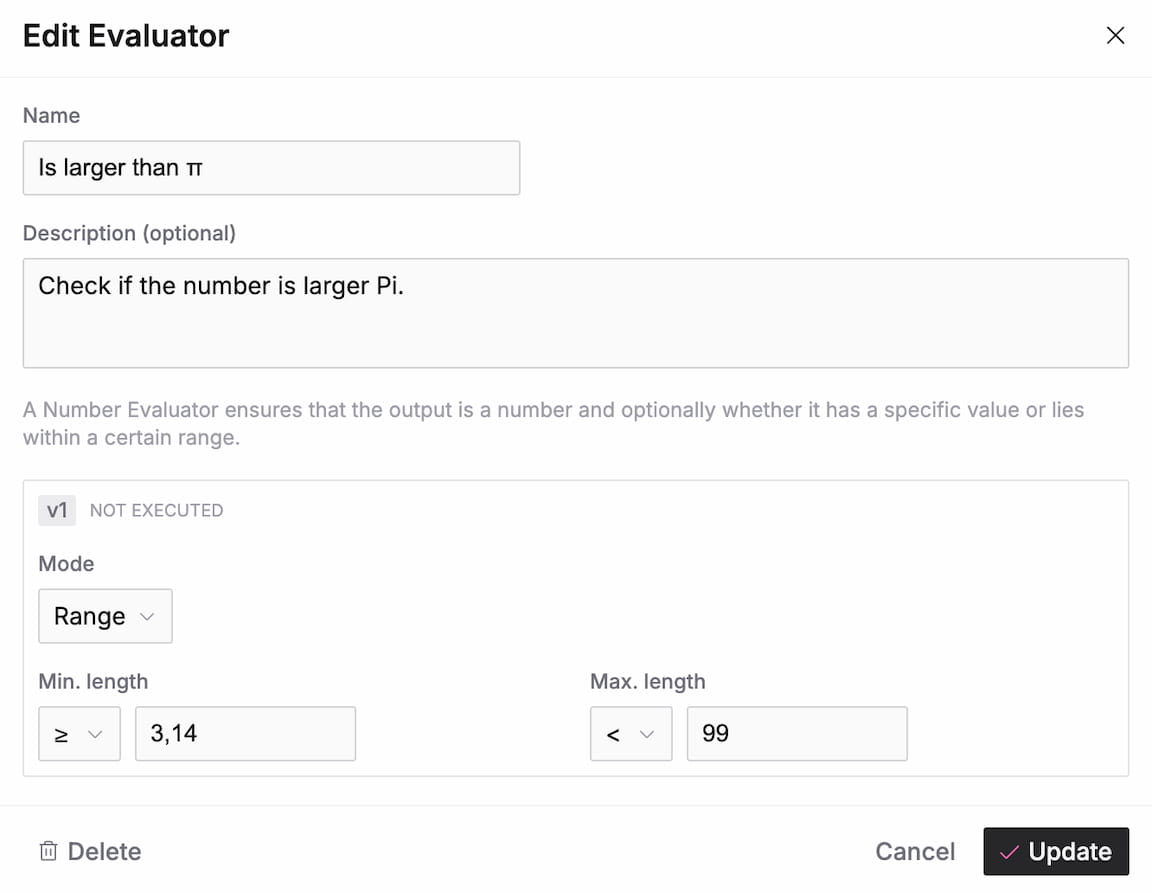

Number Evaluator

Number evaluators check that the completion content is a valid number and that it either equals a specific value (exact mode) or falls within a certain range (min/max).
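A minimal sketch of such a check follows. The function name and parameters are assumptions for illustration. What counts as a "valid number" is also an assumption here: the sketch accepts anything Python's `float()` can parse, ignores surrounding whitespace, and rejects `nan` and infinities.

```python
import math
from typing import Optional


def number_check(
    completion: str,
    exact: Optional[float] = None,
    minimum: Optional[float] = None,
    maximum: Optional[float] = None,
) -> bool:
    """Hypothetical number check: valid number, then exact or range test."""
    try:
        value = float(completion.strip())
    except ValueError:
        return False  # the completion is not a number at all
    if not math.isfinite(value):
        return False  # assumption: "nan" and "inf" do not count as valid

    if exact is not None:
        return value == exact
    if minimum is not None and value < minimum:
        return False
    if maximum is not None and value > maximum:
        return False
    return True
```

For example, `number_check(" 3.5 ", minimum=0, maximum=10)` passes, while `number_check("forty-two")` fails because the completion cannot be parsed as a number.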

JSON Evaluator

🚧 Coming soon...

Format Evaluator

🚧 Coming soon...

LLM Evaluator

🚧 Coming soon...

Time Evaluator

🚧 Coming soon...

External Evaluator

🚧 Coming soon...